Trust, or more specifically the lack of it, is one of the challenges facing the artificial intelligence industry right now. The underlying principle of “no trust, no use” makes it difficult for developers, tech companies, and AI engineers to win people’s confidence in AI and speed up its adoption.

Despite AI’s many promises, its potential dangers and the difficulty of knowing how it works make people wary of using the technology. Notable figures such as Elon Musk, Stephen Hawking, and Bill Gates have expressed concerns about the dangers AI poses to humanity, with some going as far as warning that AI could eventually make humans extinct.

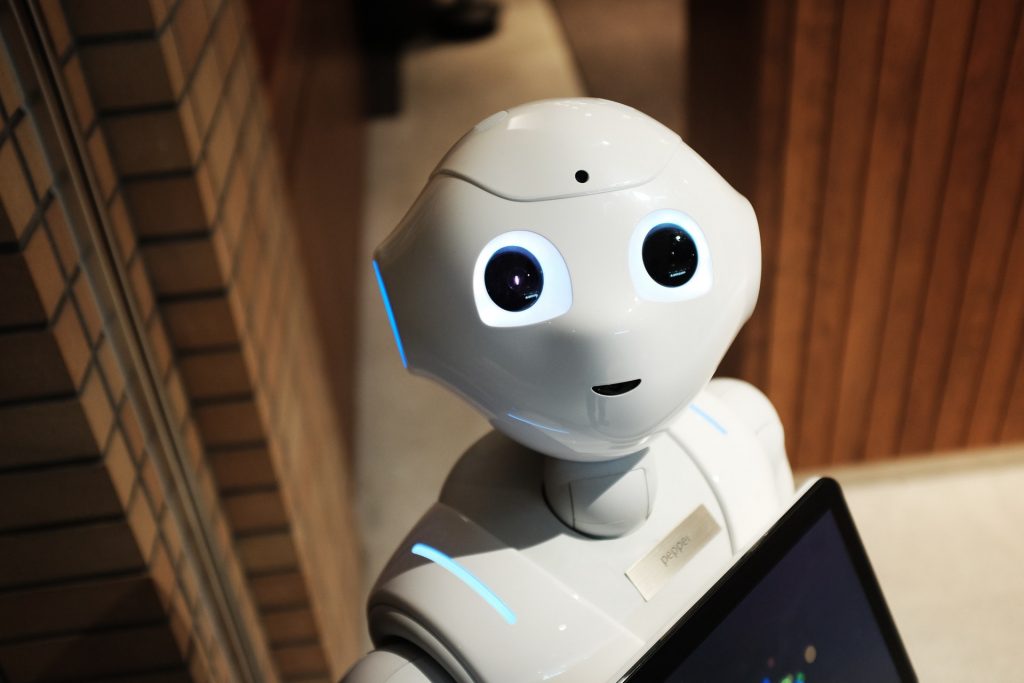

But before we delve into this controversial topic, let us first define artificial intelligence. Artificial intelligence, widely known as AI, is about building machines that can think and act like humans. It has a wide range of applications, some of which we interact with every day. If you’re using Siri, Alexa, or Cortana, you’re already engaging with AI. Google’s search algorithms are also a form of artificial intelligence, and so are the software testing automation tools that are becoming popular among developers. Soon, self-driving cars, independently operating appliances, and other AI-powered machines may become common household items.

Trust Issues

Despite the benefits, people are finding it hard to trust AI. This lack of trust is also a common reason why some of the best-known AI efforts have failed. IBM’s Watson for Oncology, for example, failed to win over the cancer doctors it was promoted to, and the project fell short of its promise. The doctors didn’t trust Watson’s results because they didn’t understand how the machine reached its conclusions.

This raises the question: how do AIs think? An AI’s decision-making process is often too complicated for ordinary people to understand. Usually the only people who know how a particular machine works are its developers and the company that built it, while the end user has little idea what goes on inside the machine’s “brain.” It is human nature to feel anxious about something we don’t understand, because it makes us feel we are losing control.

This is where explainability becomes critical. In Watson’s case, if the doctors had understood how Watson arrived at its results, they might have formed a different opinion of it and trusted it more. AI developers and tech companies should do a much better job of explaining how their systems work and how they arrive at particular decisions, so that people don’t misunderstand them. Some AI systems are easy to understand, but for most of them, developers will have to work hard to simplify the explanations or ‘dumb down’ the algorithms to make them explainable.
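One way to make a system explainable is to use a model whose decisions can be broken into parts. The Python sketch below is not how Watson works; it scores a case with a hypothetical linear model and reports how much each input pushed the score up. The feature names, weights, and bias are invented for illustration. More complex models, such as deep neural networks, cannot be split apart this simply, which is why they are harder to explain.

```python
# A hypothetical linear scoring model. The feature names, weights, and bias
# are invented for illustration and do not come from any real system.
WEIGHTS = {"tumor_size_cm": 0.5, "age_decades": 0.25, "marker_level": 1.5}
BIAS = -2.0


def score(features: dict[str, float]) -> float:
    """Linear score: the bias plus each feature value times its weight."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def explain(features: dict[str, float]) -> list[tuple[str, float]]:
    """Return each feature's contribution to the score, largest effect first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# A made-up example case.
example_case = {"tumor_size_cm": 4.0, "age_decades": 6.0, "marker_level": 0.5}

if __name__ == "__main__":
    print(f"score = {score(example_case)}")
    for name, contribution in explain(example_case):
        print(f"  {name}: {contribution:+}")
```

For the example case, the model reports a score of 2.25 and shows that tumor size contributed the most (2.0). A reader can check each step of that reasoning, which is exactly what the doctors could not do with Watson.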

Unfortunately, complex approaches such as deep learning and other sophisticated machine learning models are much harder to interpret, so these trust issues won’t be going away any time soon.

Machines That Think

One of the amazing, and perhaps scary, characteristics of AI is its ability to ‘think.’ Its ability to analyze data, make deductions, and reach decisions based on them reveals the enormous potential of artificial intelligence. Terminator’s Skynet may not even be that far off in the future. With machine learning and deep learning, a world filled with self-thinking machines is getting closer to reality.

Machine learning and deep learning are common AI buzzwords, and examples of them are everywhere. They are how Netflix predicts which flicks you’ll want to watch next and how Facebook recognizes the people in a photo.

Machine learning is defined as:

“Algorithms that parse data, learn from that data, and then apply what they’ve learned to make informed decisions.”

This concept is commonly used by services that offer automated recommendations, such as music and video streaming services. By analyzing a user’s selections and comparing them with those of other users who have similar preferences, the AI can list songs or movies that the user might like. The algorithm is also designed to keep learning, just like a virtual assistant: the more you use the service, the more accurate its predictions of what you like become.
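The “compare with similar users” idea above is known as user-based collaborative filtering. Here is a minimal Python sketch of it, assuming cosine similarity as the measure of how alike two users’ tastes are. The users, songs, and play counts are invented, and real streaming services use far more elaborate methods than this.

```python
from math import sqrt

# Hypothetical listening data: user -> {song: play count}. Illustrative only.
RATINGS = {
    "alice": {"song_a": 5, "song_b": 3, "song_c": 4},
    "bob": {"song_a": 4, "song_b": 3, "song_c": 5, "song_d": 4},
    "carol": {"song_b": 1, "song_e": 5},
}


def cosine_similarity(a: dict[str, int], b: dict[str, int]) -> float:
    """How alike two users' tastes are, from 0.0 (nothing shared) upward."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[song] * b[song] for song in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user: str, ratings: dict[str, dict[str, int]], top_n: int = 3) -> list[str]:
    """Suggest songs the user hasn't played, weighted by similar users' plays."""
    scores: dict[str, float] = {}
    for other, their_songs in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(ratings[user], their_songs)
        if similarity <= 0:
            continue
        for song, plays in their_songs.items():
            if song not in ratings[user]:
                scores[song] = scores.get(song, 0.0) + similarity * plays
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


if __name__ == "__main__":
    print(recommend("alice", RATINGS))
```

Because Alice’s history closely matches Bob’s, Bob’s `song_d` ranks ahead of Carol’s `song_e`. The “constant learning” comes from the data rather than the code: as new plays are added to `RATINGS`, the similarities and recommendations update without anyone changing the algorithm.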

Deep learning, on the other hand, is a subset of machine learning built on layered neural networks. Most basic machine learning models become progressively better at what they do, but they still need some form of guidance: engineers often have to decide which features of the data matter, and if the algorithm makes an inaccurate recommendation, they have to make adjustments. A deep learning model learns those features on its own from raw data, measuring how far its predictions are from known examples and adjusting its internal weights to reduce the error.
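To make the idea of a network correcting itself concrete, here is a minimal Python sketch of a single artificial neuron, the building block that deep networks stack into many layers. It learns the logical AND function from labeled examples using gradient descent. The training data, learning rate, and epoch count are illustrative assumptions. A real deep learning model would have many layers and would normally be built with a dedicated library rather than hand-written loops.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Labeled training examples for logical AND: (inputs, expected output).
TRAINING_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def train(data, epochs: int = 5000, learning_rate: float = 0.5):
    """Fit one sigmoid neuron by stochastic gradient descent on log loss."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            prediction = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # The error signal: how far the prediction is from the label.
            error = prediction - target
            # For a sigmoid unit with log loss, the gradient is error * input.
            w[0] -= learning_rate * error * x1
            w[1] -= learning_rate * error * x2
            b -= learning_rate * error
    return w, b


def predict(w, b, x1: int, x2: int) -> int:
    """Classify as 1 if the neuron's output is at least 0.5."""
    return 1 if sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5 else 0


weights, bias = train(TRAINING_DATA)

if __name__ == "__main__":
    for (x1, x2), _ in TRAINING_DATA:
        print(x1, x2, "->", predict(weights, bias, x1, x2))
```

On every pass, the neuron compares its predictions with the labels and nudges its weights to shrink the error, with no engineer adjusting them by hand. Deep learning applies the same principle across many layers, using backpropagation to pass the error signal back through the network.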

Google’s AlphaGo illustrates how powerful deep learning is. Developed by Google’s DeepMind, AlphaGo uses neural networks to play the board game Go. Go requires sharp intellect and a lot of thinking, so it is remarkable that AlphaGo can play at the level of professional Go players.

Should You Trust Thinking Machines?

Whether AIs should be trusted is a hot topic in the tech world. No matter how powerful AIs are, they still lack human judgment, which causes them to make mistakes or produce inaccurate results. The processes behind an AI’s decisions are hard to explain, and people tend to be wary of what they don’t understand.

Should we trust AI? The answer probably depends on these questions:

- Does it perform well?

- Is the machine safe and was it built correctly?

- Does the machine perform the way it was intended?

- Can we predict the results?

- Does the machine adhere to ethical standards?

Computers are becoming an integral part of our lives, and automation will only become more widespread over time. Before people come to trust these complex AI and machine learning systems, it is increasingly important to understand how the systems make their decisions.

Author Bio

A Computer Engineer by degree and a writer by profession, Cathy Trimidal writes for Software Tested and Outbyte. For years now, she has contributed articles focusing on the trends in IT, VPN, web apps, SEO, and digital marketing. Although she spends most of her days living in a virtual realm, she still finds time to satisfy her infinite list of interests.